-

Business processes on Azure Durable Functions

Azure Durable Functions simplify business processes with code-first development, automatic state management, and cloud-native infrastructure.

-

Complexity in software development

Software complexity is a challenge for software teams. In this article, I discuss several sources of complexity and how to deal with them.

-

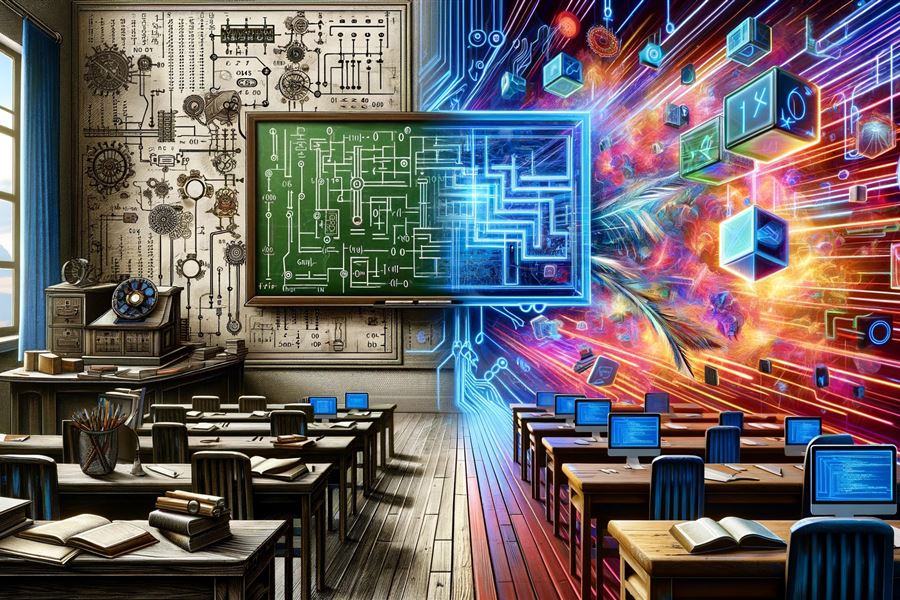

Teach the present first, history later

Tech education should prioritize current technologies and practices before delving into historical development.

-

Scale is a feature

Scaling in software is a feature that requires rethinking architecture, embracing eventual consistency, and utilizing microservices.

-

LGBTQIA+ ally flag in pure CSS

Using only CSS, it's possible to create a flag that represents the LGBTQIA+ ally community.

-

The role of software architects in Agile teams

Software architects are crucial in Agile teams, translating stakeholder needs into quality software with emergent and evolutionary architecture.

-

Evolving legacy software architecture

Sooner or later, we all have to deal with legacy software. In this article, I'll share some of my experience with refactoring and re-architecting.

-

Architectural documentation and communication

Explore software architecture documentation techniques, such as knowledge management systems, and tailor your strategy to boost team collaboration.

-

C# Dev Kit first impressions

With the C# Dev Kit extension, Visual Studio Code gets a lot of the features that make Visual Studio great for C# development.

-

Designing software architecture for security

Discover the role of Security by Design in software architecture, using Agile methodologies for proactive threat mitigation and robust cybersecurity.

-

Simplicity in software architecture

Simplicity in software architecture boosts maintainability, reduces bugs, and accelerates development at the design, system, and deployment levels.

-

Implementing software architecture patterns

This article explores four software architecture patterns and gives an overview of implementation tactics to add to your architectural toolbox.